Summary

TL;DR: ChatGPT’s killer use case is mental unblocking.

I participated in a number of discussions with “knowledge workers” about their experiences using ChatGPT in the workplace via the OpenAI UI.

Many non-technical users are finding it sufficiently useful as a writing aid that they imagine continuing its use, especially as it gets better. Use cases varied, from outlining video scripts to helping with product descriptions to generating job descriptions to general re-writes of documents. Many found it useful as an ideation tool due to its content diversity, having been trained on a truly super-massive set of examples.

The primary use case for ChatGPT is accelerating writing tasks where it’s easier to hack a prompt than think of what to write — or do — from scratch. It is a useful mental unblocking tool. The knack is learning how to write productive prompts as a kind of “programmatic writing” vs. “chatting”.

All those I spoke with encountered issues, such as hallucination. But this did not seem to present enough of a barrier to prevent usage.

Those competent at writing often did not rate the outputs as good enough to replace entire writing tasks wholesale, such as writing finished blog posts. It tends to produce unappealing, unoriginal prose unless steered with a good deal of prompt hacking. However, some claimed that such outputs still provided a better-than-nothing starting point.

Introduction

There’s much hype about ChatGPT, so I asked various folks what they have found it good at. Mostly I spoke with “knowledge workers”, including quite a few in creative professions (e.g. digital agencies). I did not speak to any who struggled to write to begin with.

What is ChatGPT?

For those unfamiliar, ChatGPT is a specially trained version of another AI model built by OpenAI from its GPT-3 family (OpenAI describes it as fine-tuned from a GPT-3.5 series model), which belongs to a class of models called Transformers. If you are a coder and want to know more, I provided a detailed code-level annotation on GitHub. For the rest of us, a Transformer is a giant computer program that spends a mind-blowing amount of time trying to guess how to complete sentences that it finds online.

This guessing game is a brute-force attempt to learn language structure. It turns out that if done long enough over a big enough corpus, the program gets stupendously good at guessing which words go with which over very long spans of text, long enough to subsequently generate quite productive prose. This is known as self-supervised learning because the program doesn’t need to be told (supervised) how to complete the task: the words it is trying to guess were already there, and it simply hides them in order to guess them.

What has surprised seemingly everybody, including AI experts and linguists, is how good this brute-force method is when carried out at a super-massive scale. And scale is seemingly what matters most, not any particular architectural sophistication.

The scale of the model allows it to learn a tremendous number of structural patterns over large spans of text. Once it has finished guessing, the trained model can be used to carry out other language-related tasks.

One such fine-tuned task is language generation. As you can imagine, a program that knows so much about which words go with which can complete entire sentences just by “guessing” the next most probable word, one at a time, over and over. This isn’t just a testament to the model’s scale, but a feature of language, namely that longer sequences are composed from smaller ones, which are composed from smaller ones (and so on), sometimes called recursion.
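To make the “guess the next word, over and over” idea concrete, here is a toy sketch. It is not how ChatGPT is built: it swaps the Transformer for a simple word-pair counter, and the corpus, `train_bigrams` and `generate` are all made up for illustration. The loop is the part that carries over: pick a probable next word, append it, repeat.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, max_words=8):
    """Repeatedly append the most frequent next word (greedy choice)."""
    out = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # nothing ever followed this word in the corpus
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


model = train_bigrams(CORPUS)
print(generate(model, "sat", max_words=4))
```

With only one word of context, a toy like this quickly starts going round in circles. A large model conditions on a far longer span of preceding text, which is where the scale discussed above comes in.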

Language has this amazing creative aspect: take any starting word and there will be dozens, sometimes hundreds, or more, of potential next words. Over the span of a sentence, this gives rise to a mind-boggling number of novel combinations of words. Indeed, perhaps we don’t realize that many of the sentences we utter in our lifetime are entirely novel in the history of language. This creative aspect of language is considered by experts to be one of the great mysteries of the mind that is very hard to explain with a scientifically tractable theory. This is perhaps why it has surprised so many that a brute-force computer program has been able to detect enough structure in its training data to allow it to complete various language tasks with human or beyond-human performance.

The truly surprising aspect of pre-trained transformers is that not only can they generate a passage of text, but they can interpret an initial seeding sequence, called a prompt, such that it can be used as a kind of instruction as to how to generate the text. This makes the AI a kind of “programmatic writing” machine. (We shall return to this perspective.)

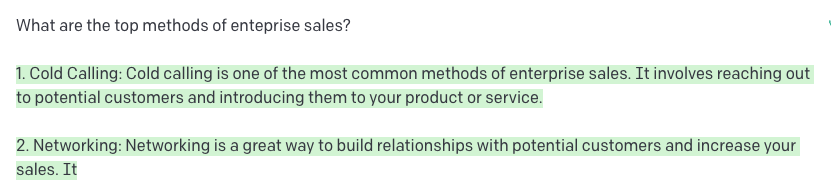

This seems like magic and has led many users to feel an illusory sense that the model “understands” what’s being requested. Here’s an example:

What’s happening here? It looks as if the program has understood the sentence and looked up the answer, as it might from a database of questions. But that is not what’s happening. The program is generating the green text as a highly probable follow-on text to the prompt: highly probable given all the vast swathes of text it has seen whilst training, and which of those, in which combinations, might plausibly follow from the prompt. Think of it this way: if we were to look at all the text on the web and find that prompt, or something similar, then most likely we would see a list of sales methods afterwards.

Let me be clear: it is generating that green text. It is not trying to look it up from the training corpus. It doesn’t know that this is the correct answer — there is no “correctness” or “truth” inherent in the program. Indeed, it does not understand any of the words in the prompt either. It is blindly performing a function: given the input sequence, what is a highly probable output sequence. The output is only plausible, and potentially truthful, because the words it saw (in relation to the prompt) during training are plausible and contain truths, facts (or not) and useful explanations and descriptions.

And this is where scale comes into the picture. If the program sees enough examples of text, as in a truly super-massive corpus, then it will have seen enough examples of this question, or what it represents, such that its answers are so probable as to overlap with common experience or useful information as available on the web and in various books.

Representation is key, as this is what the model learns. For example, the model will learn that the pattern “What…[plural nouns]…?” has a high probability of being followed by a numbered list. We can tell this by repeating the pattern and swapping out the meaning:

What is it good for? Unblocking.

Now we know what it is and how it works, what is it good for? Here I participated in a number of discussions, in-person and via forums, with various knowledge workers who had tried to use the tool in earnest to do useful work. I only asked about uses of the raw OpenAI interface, not any specialist application like the AI Copywriter tools built using GPT.

Everyone I spoke to found it useful for something. This is not surprising given its super-massive scale. It is hard to stump it, apparently even with very esoteric questions of the kind that philosopher and quantum physicist David Deutsch has been posting on his Twitter account.

Many folks were surprised by how good the tool was in getting a response that proved useful. A senior content manager at a high-end digital agency told me how he had experimented with generating script outlines for corporate videos. Having recently done some consulting related to online retail in the Metaverse, I tried something similar with a prompt: Write me a corporate video script outline for a person whose shopping experience is enhanced by shopping in the metaverse. The output followed a coherent structure with a meaningful narrative. For someone unrehearsed in script writing, it provided a useful starting point. For professional script writers, it probably isn’t so useful, except, perhaps, for ideation of scenes.

A consultant friend of mine tried to write blog posts about innovation, his area of expertise. He found the outputs coherent as a blog post, but a bit flat, lacking any originality. Indeed, it looked trite. This is to be expected if the tool is basically trying to find the most probable text.

But probabilities can be constrained, or made conditional. This is the art of refining the prompt. In that same conversation, another innovation consultant reworded the prompt to generate something more convincing. We all concluded that it was still not good enough to warrant publication (in the name of the original consultant), but some of the participants believed that it was far enough along to get there via editing, even using ChatGPT to suggest new edits on a paragraph or sentence basis.
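Here is a small sketch of what “made conditional” means. It is a toy estimate over a made-up corpus, not anything ChatGPT literally computes, and `next_word_probs` is a hypothetical helper. It shows how adding one more word of context narrows the spread of likely next words.

```python
from collections import Counter

# Made-up mini corpus for illustration only.
CORPUS = (
    "write a blog post about innovation . "
    "write a blog post about cooking . "
    "write a formal letter about innovation . "
    "write a poem about cooking ."
).split()


def next_word_probs(words, context):
    """Estimate P(next word | context) by counting where the context occurs."""
    n = len(context)
    counts = Counter(
        words[i + n]
        for i in range(len(words) - n)
        if words[i:i + n] == list(context)
    )
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


vague = next_word_probs(CORPUS, ["a"])               # several candidates
specific = next_word_probs(CORPUS, ["a", "formal"])  # only one candidate left
print(vague)
print(specific)
```

The vague context leaves the probability spread over “blog”, “formal” and “poem”, while the more specific one leaves only “letter”. Refining a prompt works in the same spirit: each added detail rules out continuations you did not want.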

Despite some believing that this prompt-manipulation will become unnecessary with new versions of the tool, this seems an unlikely prospect. This is because language is, no matter how it is generated, ambiguous and able to convey many different voices, tones and ideas with even subtle rewording.

It was interesting to watch a live session in which a Gen-Z user “hacked” the prompts back and forth to generate a LinkedIn bio for a friend, taking to the tool as if this were how writing had always been done. Those of us involved in the process all agreed that the final version was better worded than the author’s original bio. This hacking on a theme to get a final result seems like it could be a skill, and one that is more powerful in the hands of someone with a good command of language to begin with. Such a user might not know what a good LinkedIn bio looks like until they see it, which is far easier than thinking of one entirely from scratch.

And this seemed to be the killer use case: hacking on a prompt to converge upon productive content without the pain of filling the void of blank paper, a kind of mental unblocker, as it were. Indeed, a professional writer friend of mine told me how some of his fellow fiction writers were using it often to “fill the gaps” with hard-to-find narrative turns, adjectives or scene ideas.

Using ChatGPT: Programming words with words

I am not going to offer a long list of tips and instructions, but rather an insight into how to use ChatGPT productively. And it’s an insight that all of the people I spoke with discovered for themselves, and one that is widely discussed on the Web (e.g. see this article by Wharton Professor Ethan Mollick).

The trick is to understand what I explained above about how ChatGPT works: it draws on patterns learned from a truly super-massive corpus to construct plausible, or highly probable, sequences. This means it is capable of detail and nuance, but only if you instruct it with specific detail and nuance to begin with.

Users learn that using ChatGPT is a kind of “programming words using words” rather than consulting a mind-reading “Oracle” (and yes, some folks use it in that latter way and get disappointing results). Part of the programming can be the inclusion of lots of specific detail, even as bullet points, without attempting to construct a prompt that another human would necessarily find meaningful.

Consider a generic prompt like: Write me an AI strategy. This will likely produce the kind of generic or trite response expected, like identify a problem, find the data, select a model, train it and use it etc. But something more elaborate might produce more interesting responses, like: Why should a CEO of a mature company that is struggling in the marketplace care about the manifold hypothesis?

You’d have to know that the manifold hypothesis is related to AI, but this is a more nuanced prompt that generates a more nuanced response related to the nature of high-dimensional data.

The best way to discover this “programming words with words” is to experiment. Take your baseline prompt and mix in a lot of details, perhaps quirky and tangential ones, just to understand how the machine works.
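For those who like to see the “programming” part literally, here is a minimal sketch of mixing details into a baseline prompt. `build_prompt` is a hypothetical helper of my own, not part of any OpenAI tool, and the details (including the tone line) are illustrative. It only assembles text that you would then paste into the ChatGPT UI.

```python
def build_prompt(baseline, details):
    """Append each non-empty detail as a bullet point under the baseline request."""
    lines = [baseline.strip(), "", "Details to take into account:"]
    lines += [f"- {detail.strip()}" for detail in details if detail.strip()]
    return "\n".join(lines)


prompt = build_prompt(
    "Write me an AI strategy.",
    [
        "audience: CEO of a mature company struggling in the marketplace",
        "angle: why the manifold hypothesis matters",
        "tone: plain, no jargon",  # illustrative detail, swap in your own
    ],
)
print(prompt)
```

Keeping the details in a list makes it easy to add, drop or swap one at a time and see how the output shifts.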

Issues

Unsurprisingly, given that we know how Transformers work (brute-force probability machines), we expect some of the outputs not to meet the mark. To recap, the model does not attempt to “make sense” of the prompt. Rather, it looks at the sequence and, based upon patterns found in it at many levels (morphological, syntactic, semantic), computes the most probable follow-on sequence based upon a super-massive scale of prior examples (of the morphological representations, not necessarily the exact wording of the prompt).

The model can easily produce incoherent output, which has been documented by many. It can also hallucinate facts or fail to generate responses that are well reasoned given the prompt.

However, I didn’t find anyone who was attempting to use it as a general knowledge bot, like a Siri. Indeed, it was well understood that this wasn’t a wise use case, nor was any use that would require subsequently checking all factual claims in the generated text.

That friend of mine who tried to write an innovation post also used another AI model to detect whether the text had been generated. It detected with high confidence that it had. Whilst it’s not clear how these detection tools work, the potential for having content detected as fake could be worrying, especially if it gets dinged by Google Search.

Nonetheless, as said, most of the users I spoke to were not attempting to generate wholesale content for publication online, but were using it for these various writer’s-companion tasks to accelerate the production of content, knowledge, ideas, format and so on.

Where Next

Of course, pre-trained Transformers can do lots of other things, like summarize text or answer questions from a text. They can be used to analyze different document types, like invoices or business contracts, or even generate them. Perhaps they can find clauses in contracts that are likely to lead to poor value or lack of compliance, and so on. When it comes to the technical use of the underlying technology, suddenly many business processes become amenable to automation. However, the subject of my inquiry was not this, but the use of ChatGPT as a writer’s companion. For that, it appears to have sufficient value that it might be expected to enhance knowledge-worker productivity over time.

And no — none of this article was written using ChatGPT (except the indicated examples).

If you’d like to know more about how to use GPT-like technology in your company to uniquely add value, feel free to reach out.